A Custom LLM Journey - Starting with Neurosurgical Intelligence

Tech news release (January 2024): We are announcing the beta release of our Generative Retrained Syntax (GRS)-AI, a model currently aligned specifically for neurosurgical consultation. As one of the pioneers in incorporating custom AI into surgical technologies, such as the SmartForceps System, we recognize the potential of large generative models in research, in the operating room, and in medicine at large. Our goal is to build a reliable system that improves the standardization of care while remaining safe. With the model presently undergoing expert assessment and validation, we are pleased to share that the quality of its responses exceeds that of other models in this area. We are committed to advancing the field of task-specific large language models in neurosurgery, neurology and beyond.

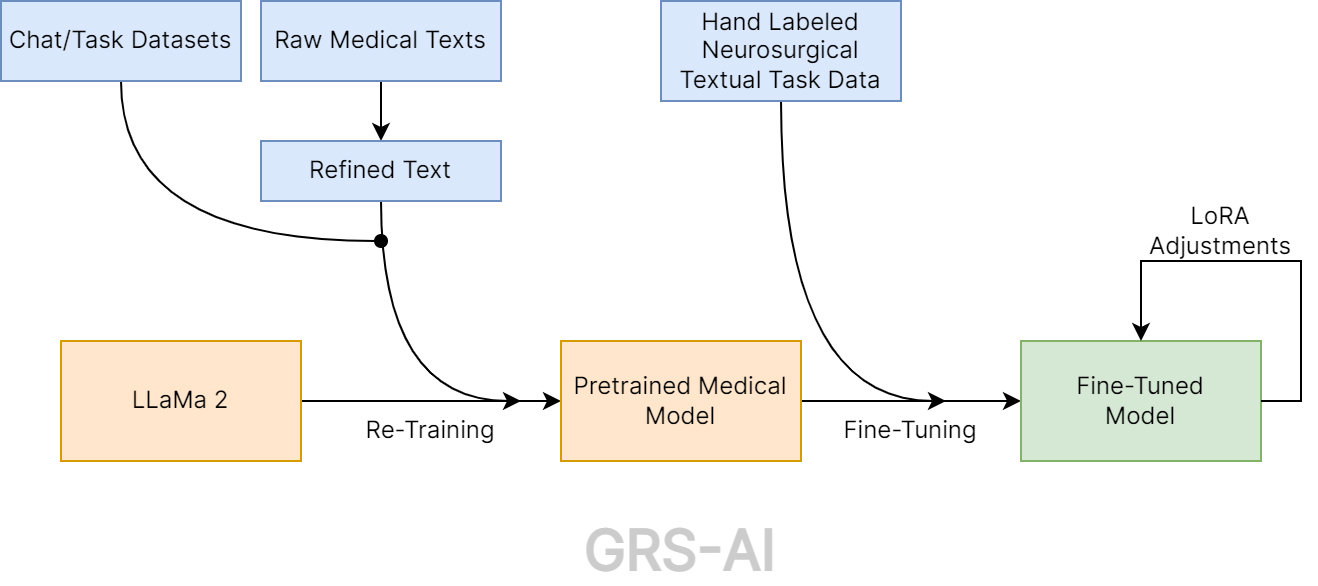

The model was trained using a multi-stage procedure. Building on the 13B Llama 2 architecture, a ‘second pre-training’ was performed on 40B tokens for 2 epochs, for a total of 80 billion training tokens. About 30-40% of this text was medical, with the remaining data drawn from open-source chat datasets so that the model retains some of its original capabilities. The medical data was extracted from highly curated medical journals with high citation indices and from select textbooks. Novel PDF clustering/mining algorithms were used to extract the paper data, and natural language processing techniques were used to augment and clean it to textbook quality.
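The token arithmetic in this stage can be checked with a short sketch. The 40B-token corpus, the 2 epochs, and the 30-40% medical share come from the description above; the helper name `token_budget` and the use of whole-number percentages are assumptions made only for illustration, not part of the actual training pipeline.

```python
# Back-of-the-envelope token budget for the 'second pre-training' stage.
# token_budget is a hypothetical helper, not part of any training code.

def token_budget(unique_tokens, epochs, medical_percent):
    """Return (total, medical, general) tokens seen during training.

    medical_percent is a whole-number percentage so the arithmetic
    stays in exact integers.
    """
    total = unique_tokens * epochs
    medical = total * medical_percent // 100
    general = total - medical
    return total, medical, general


UNIQUE_TOKENS = 40_000_000_000  # 40B tokens in the corpus (from the text)
EPOCHS = 2                      # two passes over the corpus (from the text)

# The text gives the medical share as a range, so show both ends.
for percent in (30, 40):
    total, medical, general = token_budget(UNIQUE_TOKENS, EPOCHS, percent)
    print(f"{percent}% medical: total={total:,} "
          f"medical={medical:,} general={general:,}")
```

Under these figures, the medical portion works out to roughly 24 to 32 billion of the 80 billion training tokens, with the rest coming from the open-source chat data.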

A supervised fine-tuning step was then performed with ~5,000 data points, the majority of them human-labeled, to achieve strong stability and task alignment. Finally, small adjustments to formatting and model responses were made using low-rank adapters trained on specialized alignment datasets. The model has been rigorously evaluated and refined with input from world-class neurosurgeons, challenged multiple times for accuracy and acceptability of response, and productionized through several iterations (Illustration below).
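For readers unfamiliar with low-rank adapters, the following is a minimal pure-Python sketch of the general idea: a frozen weight matrix W is left untouched, and only a small low-rank update B·A is trained. The class name `LoRALinear`, the rank, the scaling factor `alpha`, and the toy matrix are all illustrative assumptions; the rank, target layers, and tooling actually used for GRS-AI are not stated here.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / rank) * B @ A.

    Hypothetical illustration of a low-rank adapter; not the GRS-AI code.
    """

    def __init__(self, W, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W), len(W[0])
        self.W = W  # frozen base weights, shape (d_out, d_in)
        # A starts with small random values, shape (rank, d_in).
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)]
                  for _ in range(rank)]
        # B starts at zero, shape (d_out, rank), so the adapter is
        # initially a no-op and the base model's behavior is preserved.
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        """Count only the adapter parameters (A and B), not W."""
        rank, d_in = len(self.A), len(self.A[0])
        d_out = len(self.B)
        return rank * d_in + d_out * rank


# Toy example: a 2x3 frozen weight with a rank-1 adapter.
layer = LoRALinear([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], rank=1)
print(layer.forward([1.0, 1.0, 1.0]))
print(layer.trainable_params())
```

Because the adapter adds only rank × (d_in + d_out) trainable values per adapted matrix, it suits the kind of small formatting and response adjustments described above without retraining the full model.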